Google has released its own multifactor authentication hardware tokens: Titan Security Keys. Configuring your Google account to require the hardware keys is typically straightforward, but they must be added from a computer. When I tried from my Linux system, the process always failed with nothing more than "something went wrong". I eventually found directions from Google that looked straightforward but did not resolve the issue. It turns out a few tweaks were required.

Official Method

The initial directions as listed are as follows:

- Create a file called

/etc/udev/rules.d/titan-key.rules

- Populate the file with the following:

KERNEL=="hidraw*", SUBSYSTEM=="hidraw", ATTRS{idVendor}=="18d1", ATTRS{idProduct}=="5026", TAG+="uaccess"

- Save

- Reboot

Troubleshooting

Even after following the above, I was getting the same error. Looking more closely at the rule gave me some clues as to why. Essentially, the rule says that when a hidraw device appears with a vendor ID of 18d1 and a product ID of 5026, udev adds the "uaccess" tag, which grants the logged-in user access to the device. This allows Chrome to communicate directly with the USB device. That is required because FIDO U2F, the protocol in use, actually uses the URL when creating the challenge–response authentication request. Plugging a key in and checking dmesg reveals the issue:

[ 5866.626801] usb 1-2: new full-speed USB device number 16 using xhci_hcd

[ 5866.775955] usb 1-2: New USB device found, idVendor=096e, idProduct=0858, bcdDevice=44.00

[ 5866.775962] usb 1-2: New USB device strings: Mfr=1, Product=2, SerialNumber=0

[ 5866.775966] usb 1-2: Product: U2F

[ 5866.775969] usb 1-2: Manufacturer: FT

Checking both keys from the set I received confirmed that neither the vendor ID nor the product ID matches Google's documentation. This makes some sense, as the keys are actually manufactured by a company called Feitian and run Google's own firmware.
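If you need to check your own keys, the IDs can be pulled out of the kernel log rather than read by eye. This is a minimal sketch: the function name usb_ids is made up for illustration, and it assumes the log lines have the same "idVendor=..., idProduct=..." layout as the dmesg output above.

```shell
# Reads kernel log lines on stdin and prints the IDs of every
# "New USB device found" style line as vendor:product.
usb_ids() {
    sed -n 's/.*idVendor=\([0-9a-fA-F]\{4\}\), idProduct=\([0-9a-fA-F]\{4\}\).*/\1:\2/p'
}

# Intended use on a live system (dmesg may need root):
#   dmesg | usb_ids
```

Lines that do not mention both IDs are skipped, so the whole log can be piped in.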

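After looking at a few different articles, I found that udev rules can match several alternative values in a single field by separating them with "|". Additionally, my version of udev did not seem to recognize rules unless the file name was prefixed with a number. The number also sets the processing order: the uaccess tag is acted on by 73-seat-late.rules, so the file has to sort before 73.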
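To make the matching behaviour concrete, here is a rough shell analogy rather than anything udev itself runs. The function name rule_matches is hypothetical, and the values are Google's documented IDs plus the ones dmesg reported above. Shell case patterns happen to use the same "|" separator, and, like the rule, each field is checked on its own, so any listed vendor combined with any listed product is accepted.

```shell
# Analogy for a rule with ATTRS{idVendor}=="18d1|096e" and
# ATTRS{idProduct}=="5026|0858": both fields must match one alternative.
rule_matches() {
    case "$1" in
        18d1|096e) ;;
        *) return 1 ;;
    esac
    case "$2" in
        5026|0858) ;;
        *) return 1 ;;
    esac
}

rule_matches 096e 0858 && echo "096e:0858 would be tagged"
```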

Fixed Directions

- Create a file called

/etc/udev/rules.d/70-titan-key.rules

- Populate with the following:

KERNEL=="hidraw*", SUBSYSTEM=="hidraw", ATTRS{idVendor}=="18d1|096e", ATTRS{idProduct}=="5026|0858|085b", TAG+="uaccess"

- Save

- Run

sudo udevadm control --reload-rules

Unplug and reinsert the key so the reloaded rule is applied to it. Provisioning the key should now work from your Linux machine.

These directions were tested and confirmed working on openSUSE Tumbleweed with udev version 237. Additional tweaking may be required for other versions of udev.
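If provisioning still fails, it may help to confirm that the tag actually landed on the device. The following is a sketch under a few assumptions: the function name has_uaccess is made up, /dev/hidraw0 stands in for whichever hidraw node your key received, and your udevadm is assumed to print a TAGS (or CURRENT_TAGS) property in colon-separated form.

```shell
# Reads "udevadm info --query=property" output on stdin and succeeds
# if the device carries the uaccess tag.
has_uaccess() {
    grep -Eq '^(CURRENT_)?TAGS=.*:uaccess:'
}

# Intended use; /dev/hidraw0 is an assumption, pick the node your key got:
#   udevadm info --query=property --name=/dev/hidraw0 | has_uaccess && echo tagged
```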

I recently had an issue with a Linux VM that was configured with LVM. The server had run out of space several times, and each time someone simply added a new primary partition, formatted it for LVM and extended the logical volume. This was all well and good until the third time the server ran out of space. We could no longer add any more partitions to the drive. Worse, because of how the layout had been done, GParted had trouble even extending the existing partitions. For the sake of simplicity, I decided to reconfigure the entire partition scheme back to a single partition to ease future growth. Luckily, this can be done fairly easily with logical volume mirroring.

I re-created the scenario in my lab to test possible solutions. The following ended up working perfectly. In this example there are only three existing partitions but the same process could be used for more, even if the partitions are split across multiple physical drives.

Run pvs to see the current physical volumes:

# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 lvm-vg lvm2 a-- 4.54g 0

/dev/sda4 lvm-vg lvm2 a-- 4.46g 0

/dev/sda5 lvm-vg lvm2 a-- 15.76g 0

As you can see, the volume group "lvm-vg" is split across three partitions (physical volumes or PVs) with no free space on any.

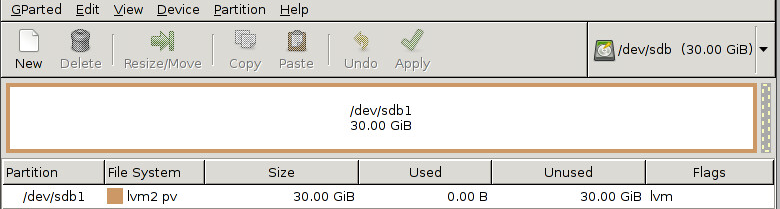

Add a second hard drive (this machine is virtual, so it was trivial) and create a single primary partition on it, set up as an LVM physical volume.

Now, back in the OS, the new disk shows up in the list of physical volumes but has not yet been added to any volume groups.

# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 lvm-vg lvm2 a-- 4.54g 0

/dev/sda4 lvm-vg lvm2 a-- 4.46g 0

/dev/sda5 lvm-vg lvm2 a-- 15.76g 0

/dev/sdb1 lvm2 a-- 30.00g 30.00g

Use vgextend to add the new physical disk to the volume group.

vgextend lvm-vg /dev/sdb1
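Before mirroring, it is worth confirming that the new physical volume can hold everything currently stored on the old ones. The check below is a sketch: the function name mirror_fits is hypothetical, it expects pvs output limited to three columns (name, size, free) in GiB without unit suffixes, and it ignores the small amount of extra space a mirror may need for its log or metadata.

```shell
# Reads "pv_name pv_size pv_free" lines on stdin. Succeeds if the free
# space on the PV named in $1 covers the space in use on all other PVs.
mirror_fits() {
    awk -v target="$1" '
        $1 == target { free = $3 + 0; next }
        NF >= 3      { used += $2 - $3 }
        END {
            printf "used elsewhere: %.2fg, free on %s: %.2fg\n", used, target, free
            exit ((free >= used) ? 0 : 1)
        }'
}

# Intended use on a live system (needs root):
#   pvs --noheadings --units g --nosuffix -o pv_name,pv_size,pv_free | mirror_fits /dev/sdb1
```

With the numbers from this example, 4.54 + 4.46 + 15.76 = 24.76g is in use on the old partitions, which fits in the 30.00g free on /dev/sdb1.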

Now convert each logical volume in the volume group "lvm-vg" to a mirrored volume. The command to do this is lvconvert. Because all of the free space is on the new physical volume, that is where the mirror copy will be placed. Note that this has to be done for each logical volume in the volume group; in my case, the root file system and swap. Each step takes quite a while, as all of the data is copied to the mirror.

lvconvert -m 1 /dev/lvm-vg/root

lvconvert -m 1 /dev/lvm-vg/swap_1
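Since the original copies are about to be dropped, it seems prudent to make sure the mirrors have finished syncing first. A small sketch of such a check follows; the function name mirrors_synced is made up, and it assumes lvs is asked for just the lv_name and copy_percent fields, with copy_percent left blank for volumes that are not mirrored.

```shell
# Reads "lv_name copy_percent" lines on stdin. Prints any LV still
# syncing and succeeds only when every reported percentage is 100.
mirrors_synced() {
    awk 'NF >= 2 && $2 + 0 < 100 { pending = 1; print $1 " at " $2 "%" }
         END { exit (pending ? 1 : 0) }'
}

# Intended use on a live system (needs root):
#   lvs --noheadings -o lv_name,copy_percent lvm-vg | mirrors_synced
```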

Once the mirror has been established, use lvconvert with the -m 0 option, specifying which partitions are to be removed.

lvconvert -m 0 /dev/lvm-vg/root /dev/sda3 /dev/sda4 /dev/sda5

lvconvert -m 0 /dev/lvm-vg/swap_1 /dev/sda3 /dev/sda4 /dev/sda5

The output of pvs should now show the free space on the partitions that were removed.

# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 lvm-vg lvm2 a-- 4.54g 4.54g

/dev/sda4 lvm-vg lvm2 a-- 4.46g 4.46g

/dev/sda5 lvm-vg lvm2 a-- 15.76g 15.76g

/dev/sdb1 lvm-vg lvm2 a-- 30.00g 5.24g

Now remove each of the partitions from the volume group entirely with vgreduce.

vgreduce lvm-vg /dev/sda3 /dev/sda4 /dev/sda5

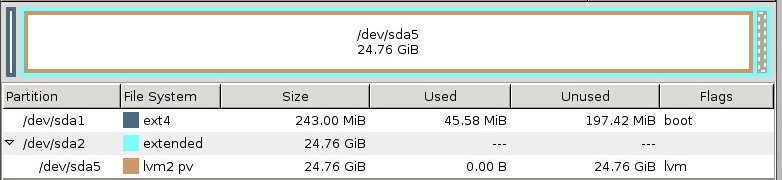

Re-partition the first disk in a more sane fashion. I did it in GParted to be safe, but everything could be done with fdisk from within the live operating system. I ended up with an extended partition containing a single LVM-formatted logical partition.

After the primary drive has been re-partitioned, repeat the process to copy everything back to it, then remove the staging drive. Now the system is on a single contiguous partition on a single drive that can be managed more easily moving forward. Make sure to resize the root filesystem once it's in its final home.
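For reference, the return trip could look roughly like the plan below. It is a dry run that only prints commands, so nothing is changed until you run them yourself as root. Several things here are assumptions rather than what I recorded at the time: /dev/sda5 is a placeholder for whatever the new partition ends up being called, the function name copy_back_plan is made up, and the final lvextend line assumes all remaining free space should go to root, with -r asking LVM to grow the filesystem along with the volume.

```shell
#!/bin/sh
# Dry-run sketch of the copy-back phase: prints the commands only.
VG=lvm-vg
NEW_PV=/dev/sda5      # assumption: the partition created while re-partitioning
STAGING_PV=/dev/sdb1  # the temporary drive added earlier
LVS="root swap_1"

copy_back_plan() {
    echo "vgextend $VG $NEW_PV"
    # Mirror each logical volume onto the new partition.
    for lv in $LVS; do
        echo "lvconvert -m 1 /dev/$VG/$lv $NEW_PV"
    done
    # Wait for the mirrors to finish syncing before running these.
    for lv in $LVS; do
        echo "lvconvert -m 0 /dev/$VG/$lv $STAGING_PV"
    done
    echo "vgreduce $VG $STAGING_PV"
    # Assumption: give all remaining free space to root and grow its filesystem.
    echo "lvextend -r -l +100%FREE /dev/$VG/root"
}

copy_back_plan
```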

Some pages that led me in the right direction:

- Server Fault post

- LVM Cluster on CentOS
